When convenience threatens curiosity

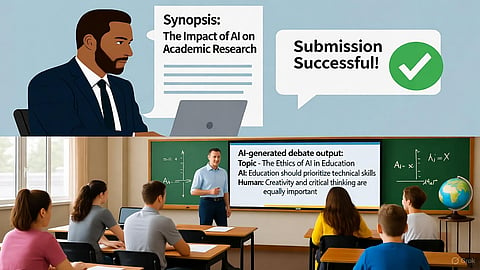

A few months ago, one of my doctoral research scholars brought me the synopsis of his proposed study. The draft was articulate, structured and polished – seemingly ready for submission. Yet, as I began reading, something felt amiss. The arguments were too generic, the citations inconsistent and several references simply did not exist.

A brief conversation revealed the truth: the entire synopsis had been generated by ChatGPT. The scholar had not plagiarised another author – he had simply outsourced his thinking to an algorithm. What seemed like efficiency was, in reality, an erosion of intellectual authenticity.

That moment was more than a revelation – it was a warning. If Artificial Intelligence begins to replace human curiosity with convenience, what happens to the soul of academia and the integrity of learning itself?

The mirage of intelligence: AI dazzles us with its speed and sophistication. It can generate text, solve problems, summarise cases and even simulate insight. But we often forget a fundamental truth: AI has no mind of its own. Its intelligence is entirely derivative – programmed, patterned and limited by the data fed into it.

AI does not think; it predicts. It does not create; it assembles. The fresh, out-of-the-box ideas that can change the world do not emerge from an algorithm – they spring from human imagination, intuition and the willingness to think beyond what is apparent.

The danger, then, is not that AI will outthink us, but that we will stop thinking altogether. As human beings, we are comfort-oriented by nature. The more we rely on instant digital solutions, the less we challenge ourselves to question, to imagine and to reason deeply. What begins as assistance soon becomes addiction – what I call AI intoxication.

The erosion of higher-order thinking: Education, at its best, is not about acquiring information but cultivating the capacity to think – to analyse, question and synthesise. AI threatens this foundation by short-circuiting the learning process.

When a student uses AI to summarise a complex case or draft a research proposal, the output may look competent, but the cognitive journey – the mental wrestling that produces insight – is lost. The machine delivers an answer, but it denies the learner the struggle that leads to understanding.

This is how creativity begins to erode. Innovation does not come from convenience; it comes from curiosity. When everything is instantly available, the human mind forgets the art of exploration. The result can be a generation of learners who may appear informed but are intellectually hollow – quick with answers but shallow in thought.

The illusion of insight: This shift is already visible in management education. Students now arrive in classrooms armed with AI-generated analyses, pre-written case solutions and polished essays. The professor’s challenge is no longer to teach content but to rekindle critical thought.

AI may summarise a 30-page Harvard case in seconds, but it cannot perceive the subtleties of context, emotion or ethics that define real leadership decisions. It can identify correlations, but not consequences. It can simulate confidence, but not conviction.

As an educator, I have seen this transition unfold rapidly. Students who once debated, questioned and defended their ideas now often defer to what the algorithm suggests. The intellectual dialogue that once animated classrooms risks being replaced by the sterile efficiency of machine outputs.

The cost of over-dependence: The greatest casualty of this dependence is higher-order thinking – the ability to analyse abstract concepts, evaluate complex trade-offs and create original insights. When this mental muscle goes unused, it weakens.

AI thrives on data and cannot function without it, because it cannot imagine on its own. When data is missing, AI can become dysfunctional, and only human intuition can work. In situations where no prior information exists – in moments that demand judgement, empathy and vision – the algorithm falters. Human creativity is capable of taking over precisely where data ends.

This creative instinct of the human mind, however, is dulled with every shortcut taken through AI. The mind becomes reactive rather than reflective. The instant availability of machine-generated responses blocks the very process that defines human intelligence – the ability to think independently. The mind then no longer engages with new situations or rises to meet challenges.

This is why I believe AI intoxication is not merely a technological issue but a psychological one too. When we become addicted to effortless outputs, we lose the patience required for deep thinking. And when that patience disappears, originality dies quietly in the background. When the human mind becomes comfortable with the status quo, it lacks the stimulation to create anything new, and complacency and mediocrity ultimately set in.

Let us not forget that AI itself was created by the human mind. If that mind becomes complacent and mediocre, there will be no innovation, and AI will not advance beyond what it is today.

The ethical paradox for educators: AI also presents a moral dilemma for teachers and institutions. Generative tools can make academic life easier – from preparing lectures and grading assignments to analysing feedback. But ease often comes with erosion. If AI can teach, assess and even mentor, what remains uniquely human about education?

The answer lies not in rejecting AI, but in redefining our relationship with it. AI should augment intellect, not replace it. It should handle repetition, not reflection. As educators, our role is to help students see technology as an instrument, not an intellect – a tool to enhance thinking, not escape it.

This demands a conscious effort to re-humanise learning. Students must be taught to question AI’s assumptions, to identify its biases and to critique its conclusions. The goal should not be to outsmart the machine, but to outthink it.

Reclaiming the human mind: The challenge extends beyond education. In business and leadership, too, the overuse of AI risks producing decision-makers who depend on data but lack discernment. Corporates may value efficiency, but in times of crisis, it is judgement – not computation – that drives survival.

AI can optimise, but it cannot empathise. It can analyse patterns, but not values. Leadership, in essence, is a human function. It requires emotional intelligence, ethical awareness and contextual understanding – qualities no algorithm possesses.

Therefore, as AI becomes central to management practice, business schools must ensure that their graduates retain what machines cannot replicate: the courage to think originally and the wisdom to act ethically.

The new divide: Another growing concern is the AI divide – a hierarchy between those who use AI intelligently and those who use it mechanically, merely as a shortcut. Elite institutions with advanced infrastructure can train students to harness AI for innovation, while smaller schools, lacking access and guidance, risk breeding dependency rather than mastery.

If left unchecked, AI can amplify educational inequality – rewarding those with digital privilege and leaving others further behind. True progress lies not in universal access to technology, but in universal capacity for critical thought.

Re-humanising the age of algorithms: AI is not inherently destructive. Like any technology, its impact depends on human intention. The same tool that can generate superficial essays can also assist in research, identify learning gaps and personalise education – if used wisely. The harnessing of fire is one of mankind’s greatest achievements, but left uncontrolled, fire can destroy the world; so it is with AI.

The key is balance. Institutions must embed AI ethics and literacy into their core curricula, redesign assessments to measure reasoning rather than regurgitation and invest in faculty development that blends technological competence with philosophical reflection. More importantly, educators and leaders must model intellectual discipline with the clear understanding that while AI can provide answers, wisdom comes from questioning.

Integrity as true intelligence: The day my scholar handed me that AI-generated synopsis, I realised the true threat was not technological but moral. AI will not destroy academia – complacency will. If we allow machines to think for us, we surrender the very essence of being human – the restless curiosity that drives discovery and the moral reasoning that guides action. But if we harness AI with integrity and awareness, it can become an extraordinary partner in thought rather than a proxy for it.

In the end, intelligence – whether human or artificial – draws its worth not from speed or sophistication, but from integrity. The future of education, and indeed of leadership, depends on our ability to remember this simple truth: AI may assist our minds, but it must never replace our souls. AI is, after all, an aid to human endeavour, not a panacea for all human problems.